Are you looking for ways to keep Google from indexing your website? Search engine indexing of your website is essential for it to be found in search engine results pages. However, there might be times when you want to ensure that Google doesn’t index a particular page on your website. De-indexing a page or an entire website from Google is a relatively easy process.

You can keep Google from indexing your website or specific pages on it by using the “noindex” meta tag, a robots.txt file, the X-Robots-Tag HTTP header, or Google Search Console.

To learn more about these methods and how to use them, read this article to the end. We’ll explain each method in detail and show you how to use it to keep Google from indexing your website.

How Can I Keep Google From Indexing My Website?

Here are the main ways to keep Google from indexing pages on your website:

1. Use A “Noindex” Meta Tag

Using the “noindex” meta tag is the most effective way to keep Google from indexing pages on your website. This tag is a directive that tells search engine crawlers not to index the page, so search engines won’t show noindexed pages in their results.

Google supports the “noindex” rule in either a <meta> tag or an HTTP response header, so you can use either one to keep search engines from indexing your content. Googlebot reads the tag or header when it crawls the page. This means the page must not be blocked by your robots.txt file: if Googlebot can’t crawl the page, it never sees the “noindex” rule.

Once Googlebot finds the “noindex” rule, Google drops the page from its search results entirely, regardless of whether other sites link to it.

If you don’t have root access to your server, “noindex” is especially helpful, because it lets you control indexing page by page.

How To Use The “noindex” Meta Tag?

To use this tag, add the following line to the <head> section of the page’s HTML: <meta name="robots" content="noindex">.

Whether you can insert this tag directly depends on your CMS (Content Management System). Some CMSs, such as WordPress, don’t let users edit a page’s source code directly; in that case, you can use an SEO plugin such as Yoast SEO. If you want to de-index every page, the tag has to be added to each page.

Sometimes website owners want search engines to not only skip indexing a page but also not follow the links on it. In that case, combine the “noindex” and “nofollow” values in a single robots meta tag, as in the example below:

<meta name="robots" content="noindex, nofollow">
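To confirm that a page actually carries the tag, you can parse its HTML. Below is a minimal Python sketch that uses only the standard library’s html.parser and assumes you already have the page’s HTML as a string; the names RobotsMetaParser and robots_directives are hypothetical. It collects the rules from robots meta tags (and Google-only googlebot meta tags) and ignores every other meta tag. It does not look at X-Robots-Tag headers.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects the rules from robots (and googlebot) meta tags on a page."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names, but not attribute values.
        if tag != "meta":
            return
        attributes = {name: (value or "") for name, value in attrs}
        # name="robots" targets all crawlers; name="googlebot" targets only Google.
        if attributes.get("name", "").strip().lower() not in {"robots", "googlebot"}:
            return
        for directive in attributes.get("content", "").split(","):
            directive = directive.strip().lower()
            if directive:
                self.directives.add(directive)


def robots_directives(html):
    """Return the set of robots rules declared in the page's meta tags."""
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    return parser.directives


page = """<html><head>
<meta name="robots" content="noindex, nofollow">
<title>Thank you</title>
</head><body>Thanks for signing up!</body></html>"""

directives = robots_directives(page)
print("noindex" in directives)  # True
print(sorted(directives))       # ['nofollow', 'noindex']
```

Because self-closing tags such as <meta ... /> are routed through handle_starttag as well, the same check works for XHTML-style markup.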

2. Use A Robots.txt File

A robots.txt file tells search engine crawlers which pages or files on your site they may or may not crawl. However, it isn’t meant for hiding pages from search engines: it controls crawling, not indexing, so a blocked page can still appear in search results if other pages link to it.

Instead, robots.txt is mainly used to manage crawler traffic to your site and to keep media files such as images and videos from appearing in search results.

How To Use The Robots.txt File?

Creating a robots.txt file takes some technical knowledge. Use a text editor to create a plain text file named robots.txt, encoded in UTF-8 (standard ASCII also works, since it is a subset of UTF-8). Then upload the file to your website’s root folder so that it is reachable at /robots.txt on your domain.

For the details, see our guide on how to write a robots.txt file. Google also publishes a separate guide on keeping images and videos out of its search results.
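Before uploading a robots.txt file, you can check what its rules allow. The sketch below uses Python’s standard urllib.robotparser module; the robots.txt content and the www.example.com URLs are made-up examples. Keep two limits in mind: robots.txt only controls crawling (a blocked URL can still be indexed if it is linked from elsewhere), and Python’s parser uses simple prefix matching, so it doesn’t reproduce every detail of how Google interprets rules, such as wildcards. Treat it as a rough check.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: keep every crawler out of /admin/ and keep
# Google's image crawler out of /private-images/.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/

User-agent: Googlebot-Image
Disallow: /private-images/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

checks = [
    ("Googlebot", "https://www.example.com/admin/settings"),             # blocked by the * group
    ("Googlebot", "https://www.example.com/blog/post"),                  # allowed
    ("Googlebot-Image", "https://www.example.com/private-images/a.jpg"),  # blocked by its own group
]
for agent, url in checks:
    print(agent, url, parser.can_fetch(agent, url))
```

Note that a crawler with its own group (Googlebot-Image here) follows only that group, not the rules under “User-agent: *”.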

3. Use An X-Robots-Tag HTTP Header

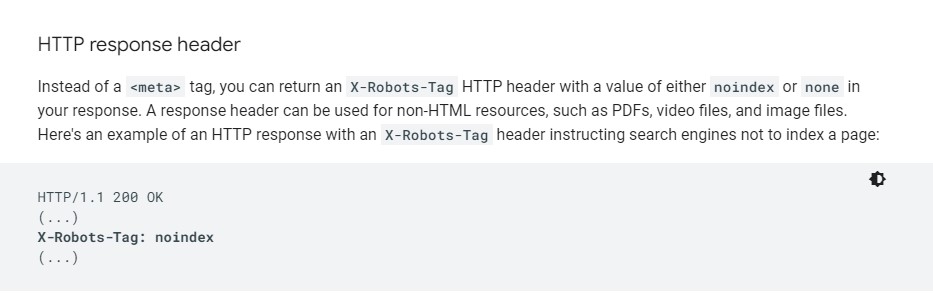

Another way to prevent Google from indexing your site is the X-Robots-Tag HTTP header, which you add to the HTTP response for a given URL. It has the same effect as the “noindex” meta tag.

The X-Robots-Tag header accepts the same values as the robots meta tag, and you can address different rules to different search engines by naming their crawlers.

Its main advantage is flexibility. Because it is sent with the HTTP response rather than written into the HTML, it also works for non-HTML files such as PDFs and images, and you can apply it to many URLs at once, even your entire site, through your server configuration.

How To Use The X-Robots-Tag?

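The X-Robots-Tag header has to be set where your pages are served, in your web server or application configuration, for example with the Header directive of Apache’s mod_headers module or nginx’s add_header directive. Browser extensions such as ModHeader for Google Chrome only change the headers your own browser sees, so they’re handy for testing but won’t keep Google from indexing anything.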

Here are some example X-Robots-Tag headers:

- To de-index a web page: X-Robots-Tag: noindex

- To set different de-indexing rules for different search engines, prefix each rule with the crawler’s name, for example X-Robots-Tag: googlebot: nofollow together with X-Robots-Tag: otherbot: noindex, nofollow
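If your site is served by a Python web application rather than configured at the web server, the header can be added in code. The sketch below assumes a WSGI application (the standard Python web server interface, PEP 3333); the names noindex_middleware and hello_app are hypothetical. The middleware wraps any WSGI app so that every response carries an X-Robots-Tag header, and the example then calls the app with a fake request to show the headers it would send.

```python
from wsgiref.util import setup_testing_defaults


def noindex_middleware(app, value="noindex"):
    """Wrap a WSGI app so every response carries an X-Robots-Tag header."""

    def wrapped(environ, start_response):
        def start_with_header(status, headers, exc_info=None):
            # Drop any X-Robots-Tag the app already set so it isn't sent twice.
            headers = [(k, v) for k, v in headers if k.lower() != "x-robots-tag"]
            headers.append(("X-Robots-Tag", value))
            return start_response(status, headers, exc_info)

        return app(environ, start_with_header)

    return wrapped


def hello_app(environ, start_response):
    """A tiny example app standing in for your real site."""
    start_response("200 OK", [("Content-Type", "text/plain; charset=utf-8")])
    return [b"Hello"]


app = noindex_middleware(hello_app)

# Call the app directly with a fake request to inspect the headers it sends.
captured = {}


def fake_start_response(status, headers, exc_info=None):
    captured["status"] = status
    captured["headers"] = headers


environ = {}
setup_testing_defaults(environ)
body = b"".join(app(environ, fake_start_response))
print(captured["status"], captured["headers"])
# 200 OK [('Content-Type', 'text/plain; charset=utf-8'), ('X-Robots-Tag', 'noindex')]
```

To target a single crawler, pass a value such as "googlebot: nofollow". To try it locally, you could serve the wrapped app with the standard library’s wsgiref.simple_server.make_server and check the response headers in your browser’s developer tools.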

4. Use The Removals Tool In Google Search Console

You can temporarily block pages from Google’s search results with the Removals tool in Google Search Console (formerly Google Webmaster Tools, where it was called the “Remove URLs” tool). This method only applies to Google Search.

Other search engines have their own tools. Keep in mind that this tool removes URLs only temporarily; it doesn’t block them permanently.

How To Use The Removals Tool?

Using the tool is relatively easy. First, open the “Remove URLs Tool” and select your property in Search Console.

Next, select “Temporarily Hide” and enter the page’s URL. Then choose “Clear URL from Cache & Temporarily Remove From Search.”

Google then hides the selected pages from its search results for about six months and clears the page’s cached copy and snippets. To keep a page out of Google for good, also add a “noindex” rule or remove the page before the removal expires.

Which Web Pages Of Your Site Don’t Need To Be Indexed By Google?

Search engines don’t need to index every page on your site, and indexing some pages brings no benefit at all. These include:

- Thank You Pages

- Admin Pages

- Landing Pages for Ads

- Policy and Privacy pages

- Low-value pages

- Duplicate pages

We recommend systematically auditing your website’s content before de-indexing anything. An audit will help you decide which pages to exclude and which to keep.
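Once your audit has settled which page types to exclude, it can help to record that decision in one place. The Python sketch below assumes hypothetical URL sections for the page types above (/thank-you, /admin, /lp for ad landing pages, /privacy-policy); replace them with your site’s real paths. The hypothetical function robots_directive returns "noindex" for pages in those sections, a value you could send as an X-Robots-Tag header, and None for everything else.

```python
# Hypothetical site sections that should stay out of search results.
NOINDEX_SECTIONS = (
    "/thank-you",
    "/admin",
    "/lp",               # landing pages for ads
    "/privacy-policy",
)


def robots_directive(path):
    """Return "noindex" for paths inside a NOINDEX_SECTIONS section, else None."""
    path = path.lower()
    for section in NOINDEX_SECTIONS:
        # Match the section itself or anything below it, but not unrelated
        # paths that merely share a prefix (e.g. /administration-guide).
        if path == section or path.startswith(section + "/"):
            return "noindex"
    return None


for path in ["/thank-you", "/admin/users", "/administration-guide", "/blog/post"]:
    print(path, robots_directive(path))
# /thank-you noindex
# /admin/users noindex
# /administration-guide None
# /blog/post None
```

Matching whole path segments rather than raw prefixes keeps the rule from accidentally hiding pages you want indexed.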

Why Do You Need To Prevent Google From Indexing Your Website or Particular Pages?

There are only a few reasons to keep Google from indexing pages on your site, but they are worth knowing:

1. Improve The Goal Attribution And Tracking Of Your Site

For many marketers and webmasters, a visit to the “Thank You” page counts as a completed form goal. If organic search traffic lands on that page without anyone filling out the form, your goal completions and conversion rate become inaccurate.

To keep organic visitors from landing on your “Thank You” page, de-index it entirely.

2. Prevent Duplicate Content Issues

Publishing identical or nearly identical pages can lead Google to make decisions you didn’t intend. When the same content appears on more than one page, whether on your site or elsewhere on the internet, Google can’t always tell which version is the original.

For example, you might publish a page first and later publish an identical page that is backed by more authoritative sources. With both pages on your website, which one should Google include in its search results? The outcome is unpredictable.

Therefore, to avoid duplicate content issues, keep Google from indexing the duplicate versions of those pages.
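Before de-indexing duplicates, you first need to find them. The Python sketch below assumes you have already collected each page’s visible text (the URLs and texts here are made up), and the names fingerprint and find_duplicates are hypothetical. It groups pages whose text is identical after collapsing whitespace and ignoring case. It only catches exact duplicates; nearly identical pages need a fuzzier comparison.

```python
import hashlib
import re
from collections import defaultdict


def fingerprint(text):
    """Hash page text after collapsing whitespace and lowercasing it."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def find_duplicates(pages):
    """Return sorted groups of URLs whose normalized text is identical."""
    groups = defaultdict(list)
    for url, text in pages.items():
        groups[fingerprint(text)].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


pages = {
    "https://www.example.com/shoes": "Red running shoes.  Free shipping.",
    "https://www.example.com/shoes?ref=ad": "Red running shoes. Free shipping.",
    "https://www.example.com/hats": "Wool hats for winter.",
}
print(find_duplicates(pages))
# [['https://www.example.com/shoes', 'https://www.example.com/shoes?ref=ad']]
```

In each group, you would keep the version you want to rank and apply “noindex” to the others.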

3. Reduce Pages On Your Site With No User Value

Keeping search engines from indexing pages with no value to users stops your site’s SEO value from being spread too thin. Hiding those pages can raise the overall value search engines assign to your site.

Google will then focus on the relevant, useful pages that deserve to be found.

Frequently Asked Questions:

How long does it take for Google to stop indexing a site?

How long Google takes to de-index a site varies. It can take as little as four days or as long as six months.

What happens if you turn off indexing?

When you turn off indexing for a page, Google removes it from its search results after it recrawls the page, so the page no longer receives organic search traffic from Google.

When should you avoid indexing?

Avoid indexing pages that offer little value to searchers, such as thank-you pages, admin pages, duplicate pages, and other low-value pages.

Final Words

After you send a de-indexing request, Google needs time to process it, and the change can sometimes take a few weeks to show up. Don’t get frustrated: by following the methods above, you can keep Google from indexing your website.

We hope this article has been helpful and has answered your question, “How can I keep Google from indexing my website?”

We’ve tried to cover everything you need to know. If anything is still unclear, leave a comment in the comment section or contact us, and our team will reply soon!